Resolve-Ables: Visualizing Phenomena Through Light Painting

Chris Hill

(Special thanks to Casey Hunt)

Master’s Thesis at the University of Colorado, Boulder

Introduction:

The Resolve-Ables project is a collection of wearable devices that enable visualization of phenomena we can’t perceive with our biological senses. In our environments, we are surrounded by fields and waves that we can’t observe or interact with. This project sets out to design tools that create artistic representations of these phenomena through different sensing, visual, and photographic techniques.

This project is a result of my Master’s thesis from the ATLAS Institute at the University of Colorado, Boulder. I came into the ATLAS program as a Ph.D. student who wanted to research and develop human augmentation interfaces. This thesis is a representation of my interests that have been refined during my time in the program and the technical skills I have developed.

Resolve-Ables:

The name Resolve-Ables comes from the ability to resolve objects in a microscope, making qualities of an object visible. The “ables” part refers to the wearable design of the devices.

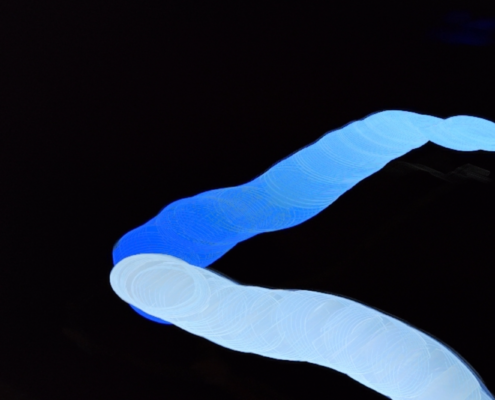

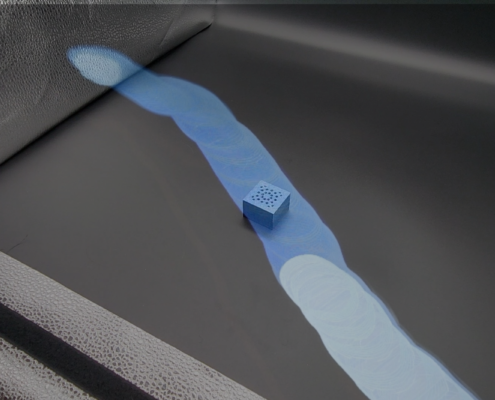

Resolve-Ables focus on visualizing phenomena through wearable devices. The technique I chose for the visualizations is light painting, a photographic technique that uses a camera’s long exposure and a moving light source to create visual art. The experimental nature of Resolve-Ables encouraged me to explore several different technical concepts simultaneously. I knew that if I wanted Resolve-Ables to stand as a concept, I needed to create several examples of it.

My strategy when creating Resolve-Ables was to partition the project into three main devices:

- Device 1 is a wearable that detects magnetic fields and uses them to actuate LEDs on the hand.

- Device 2 is an artificial intelligence (AI) nose that visualizes its classifier’s scent predictions.

- Device 3 is a system that can visualize ultrasonic frequencies with a p5.js script.

Background and Context:

The catalyst of this project was a discussion I had with my advisor Daniel Leithinger back in September about a project called Immaterials by Timo Arnall, Jørn Knutsen, and Einar Sneve Martinussen. This group used LED wands to visualize RFID scanner fields and Wi-Fi signal strength (pictured below).

Several other projects have also inspired the types of visualizations I want to create as part of this thesis. Projects like the electromagnetic field (EMF) Light Paintings by Luke Sturgeon and Shamik Ray (Figure 2, top left) and light paintings by Anthony Devincenzi (Figure 2, top right) made me interested in probing objects for invisible phenomena. Projects like Location-based Light Painting by Phillipp Schmitt (Figure 2, bottom left) and Light Painting Acceleration by Jake Ingman (Figure 2, bottom right) encouraged me to explore different environments in which to create light paintings.

Figure 2: Photos by Luke Sturgeon and Shamik Ray (top left), Anthony Devincenzi (top right), Phillipp Schmitt (bottom left), and Jake Ingman (bottom right).

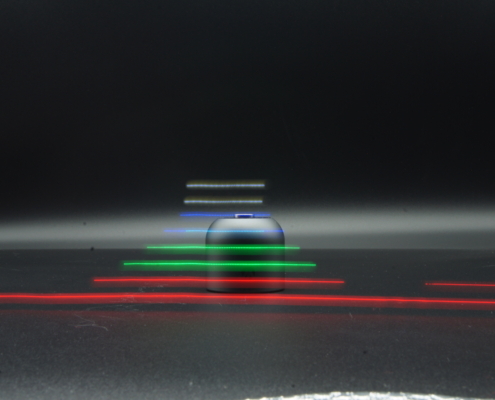

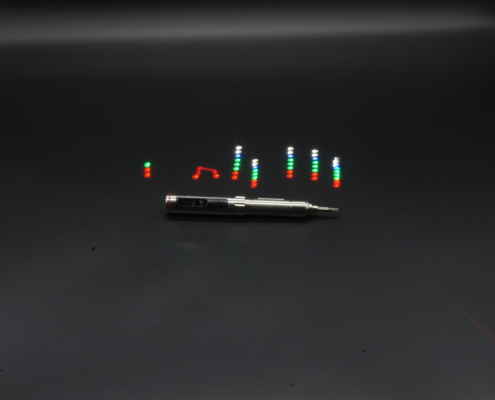

Exploration 1: Visualize Magnetic Fields With a Light Painting Wearable

Introduction

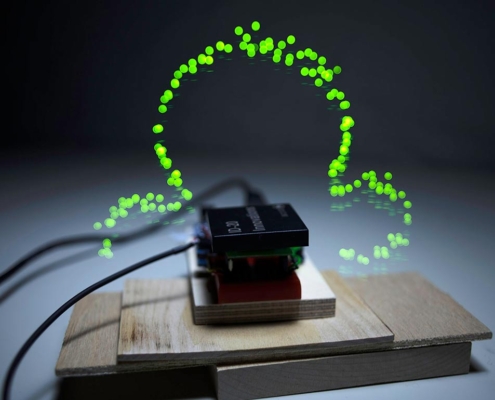

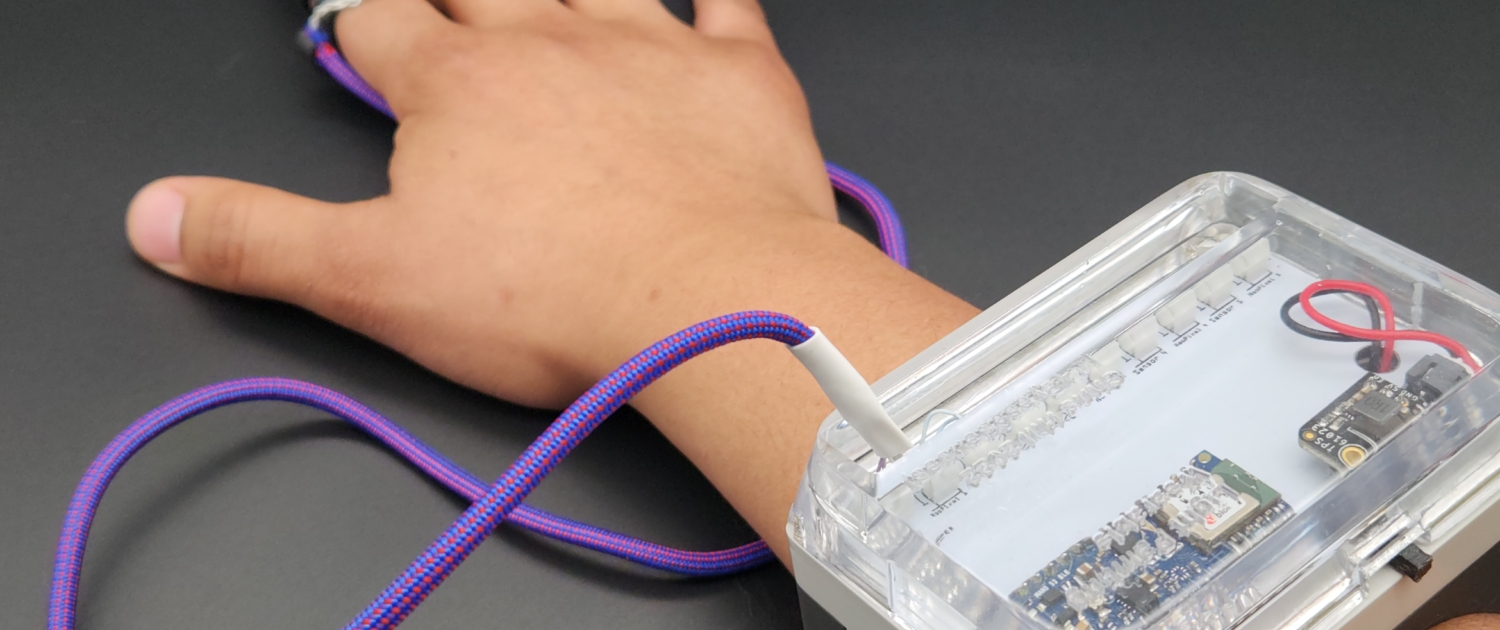

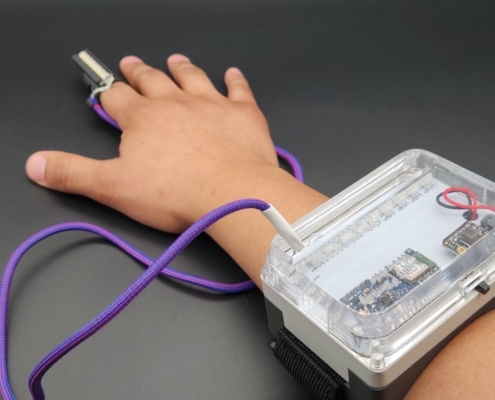

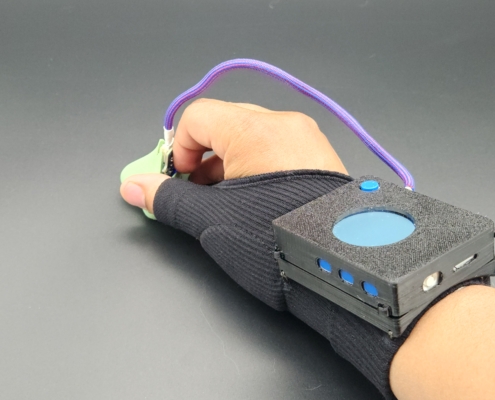

Ever since I got my first magnet implant, I’ve been obsessed with magnetic fields. I wanted a way to share the fields that I sense with other people. My idea is to visualize magnetic fields through a light painting wearable. When a GMR (giant magnetoresistance) sensor detects a magnetic field, a NeoPixel stick lights up to represent the field strength, and when the device is photographed using long exposure, you can see the different strengths of the field. The way the NeoPixels light up can be reprogrammed (blinking, colors, number of pixels lit, etc.) to create visually interesting combinations.
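As a rough illustration of the mapping described above, here is a minimal Python sketch that turns a sensor reading into a number of lit pixels. It is not the firmware running on the device: the 8-pixel stick, the 10-bit analog reading, the noise floor, the linear scaling, and the function names `pixels_to_light` and `frame_for` are all assumptions made for this example.

```python
# Hypothetical values, chosen only for illustration.
NUM_PIXELS = 8      # assumed: an 8-LED NeoPixel stick
ADC_MAX = 1023      # assumed: sensor read as a 10-bit analog value
NOISE_FLOOR = 20    # assumed: readings at or below this count as "no field"
OFF = (0, 0, 0)


def pixels_to_light(reading: int) -> int:
    """Map a raw sensor reading to how many pixels should be lit."""
    clamped = max(0, min(reading, ADC_MAX))
    if clamped <= NOISE_FLOOR:
        return 0
    span = ADC_MAX - NOISE_FLOOR
    # Scale the usable range onto 1..NUM_PIXELS so that any detected
    # field lights at least one pixel and the maximum lights them all.
    return 1 + (clamped - NOISE_FLOOR - 1) * NUM_PIXELS // span


def frame_for(reading: int, color=(0, 0, 255)) -> list:
    """Return one (r, g, b) tuple per pixel for the given reading."""
    lit = pixels_to_light(reading)
    return [color if i < lit else OFF for i in range(NUM_PIXELS)]


if __name__ == "__main__":
    for sample in (0, 100, 500, 1023):
        print(sample, pixels_to_light(sample))
```

Swapping the `color` argument, or blinking the frame on alternate updates, is one way the different visual effects mentioned above could be produced in the long-exposure photograph.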

Design:

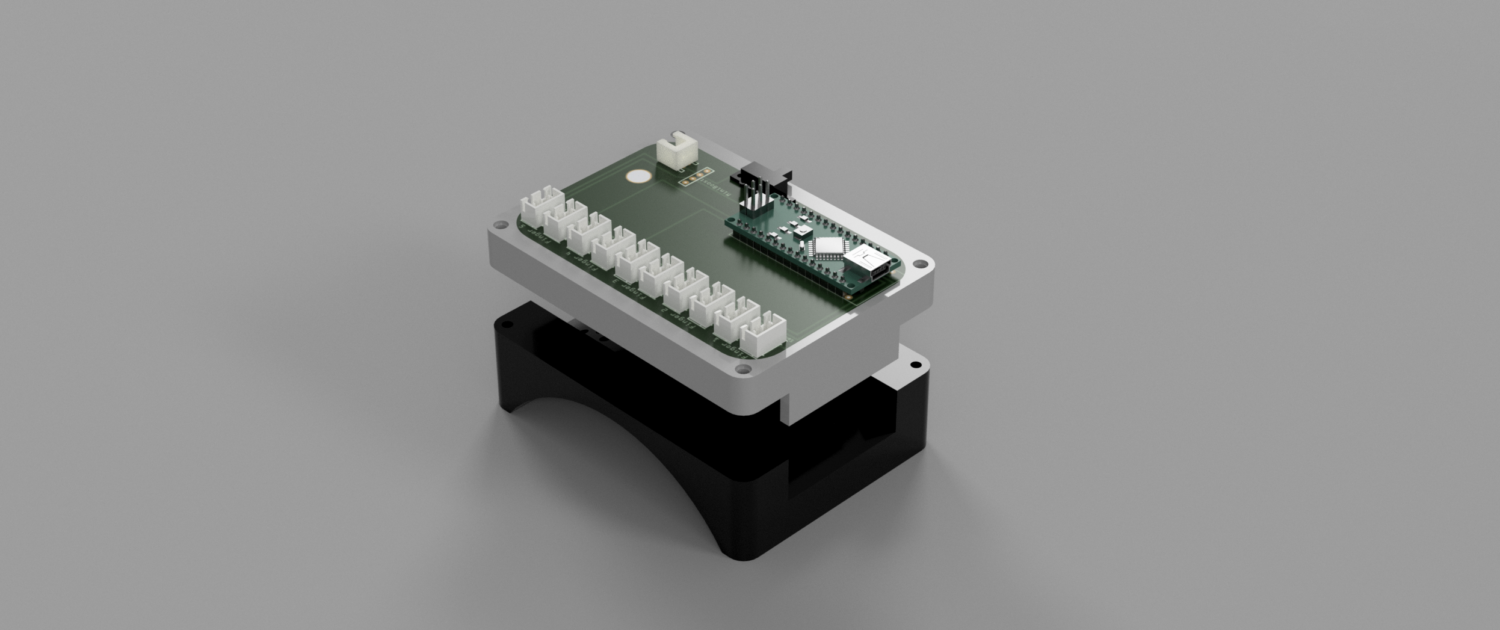

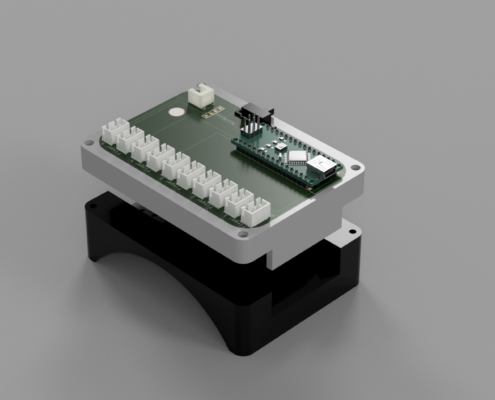

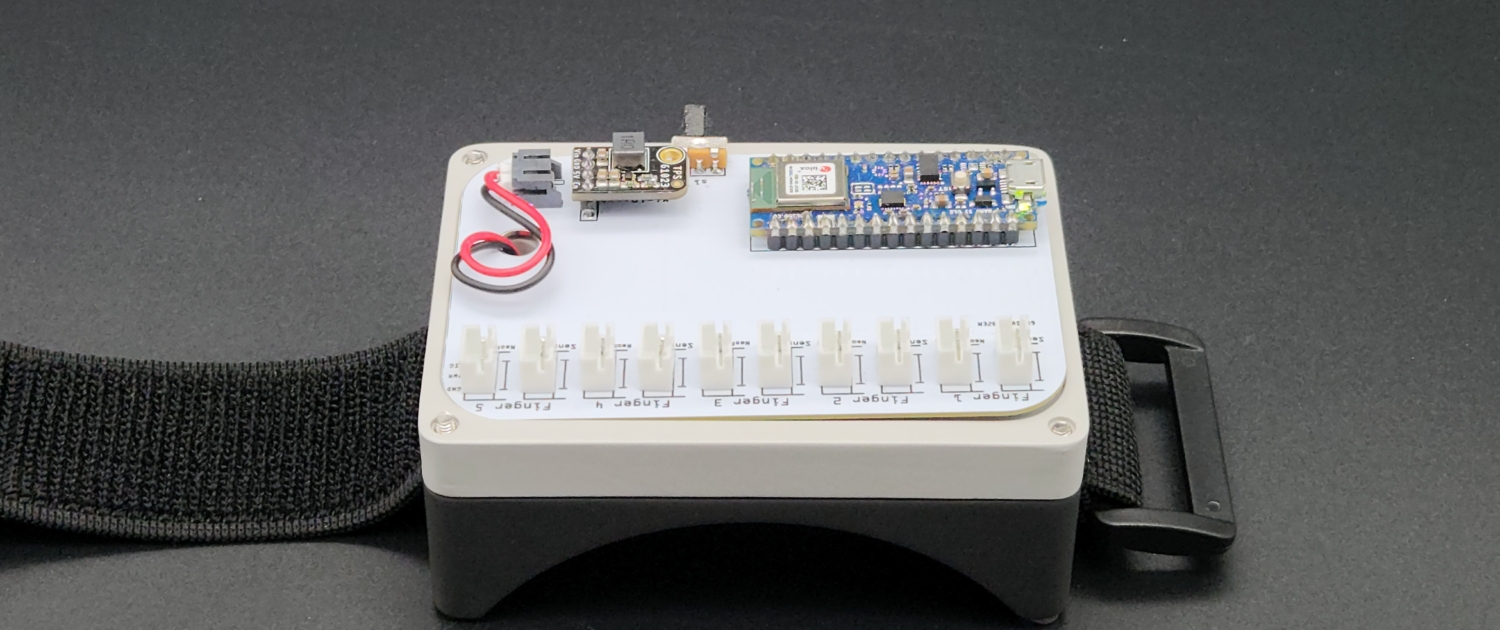

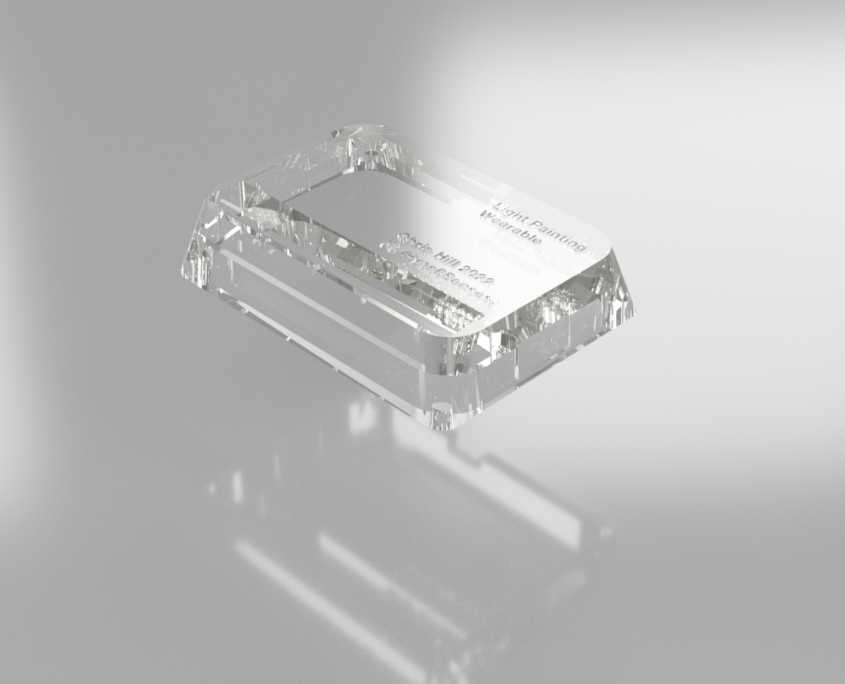

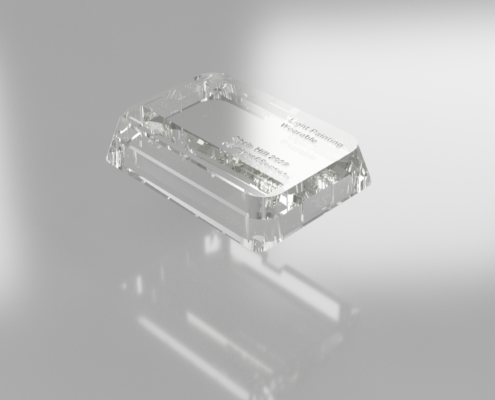

Custom PCB:

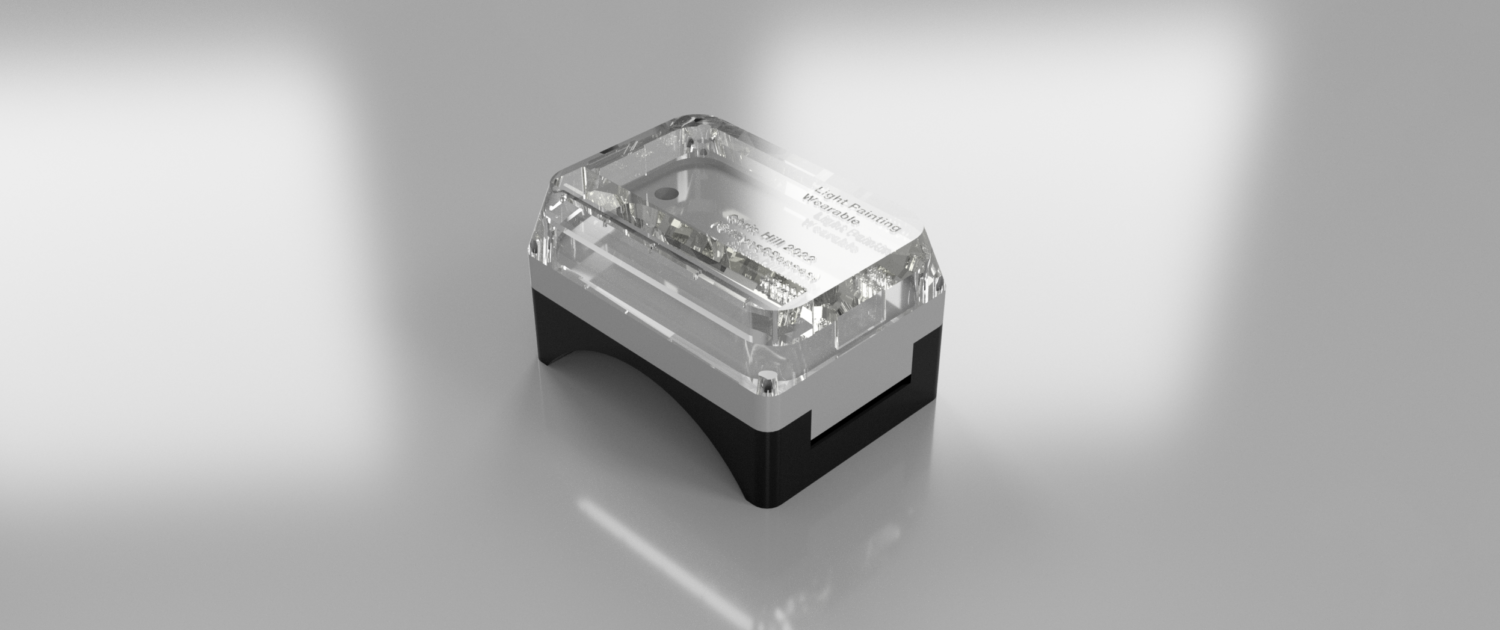

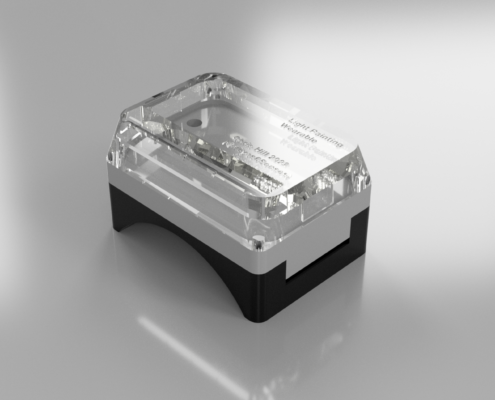

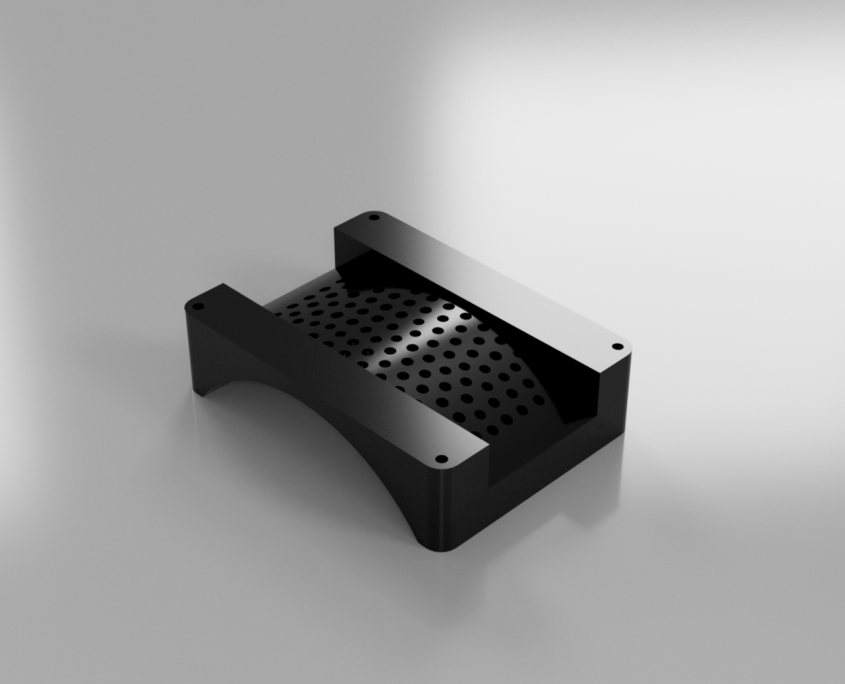

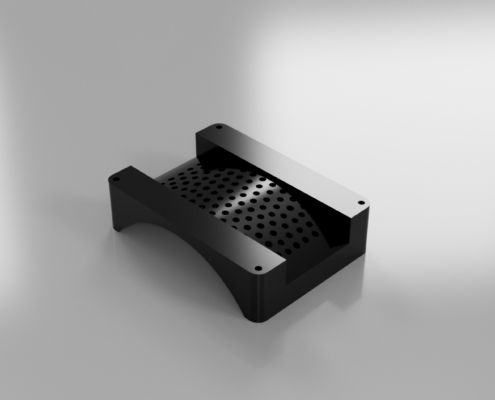

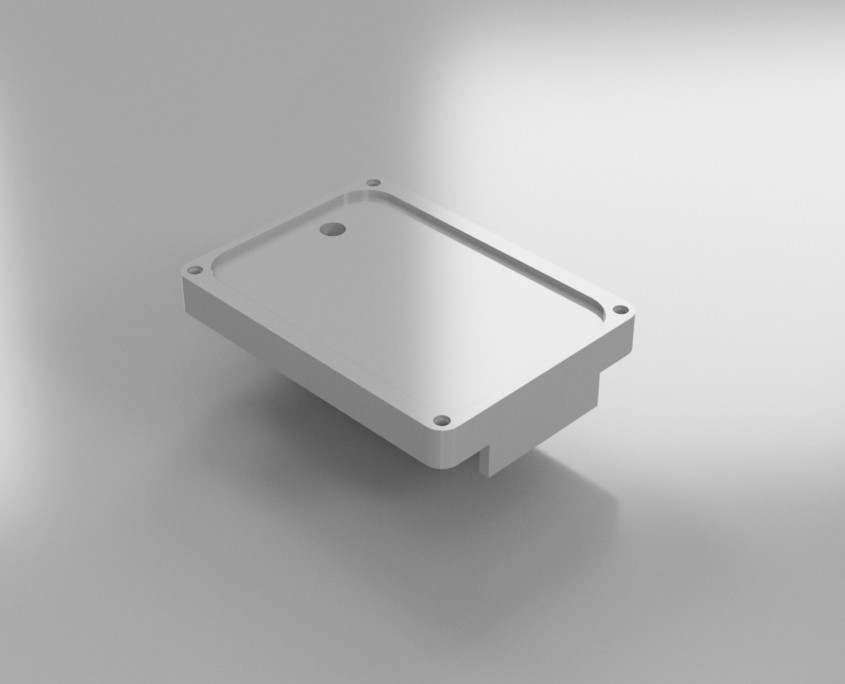

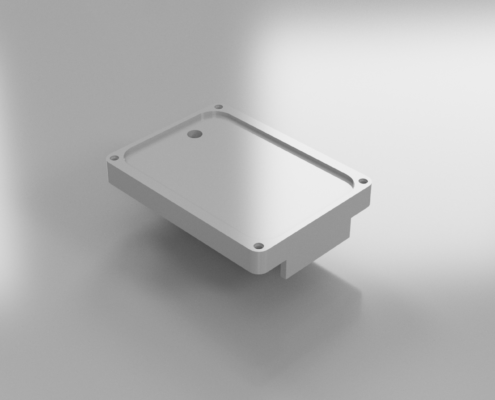

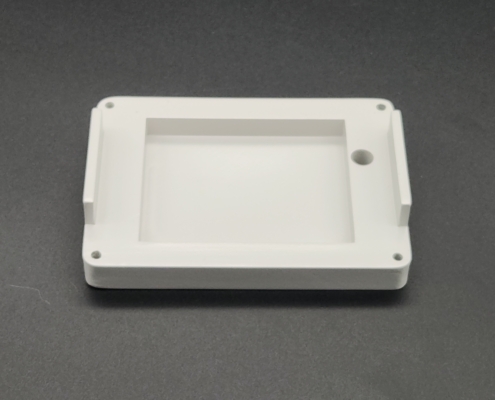

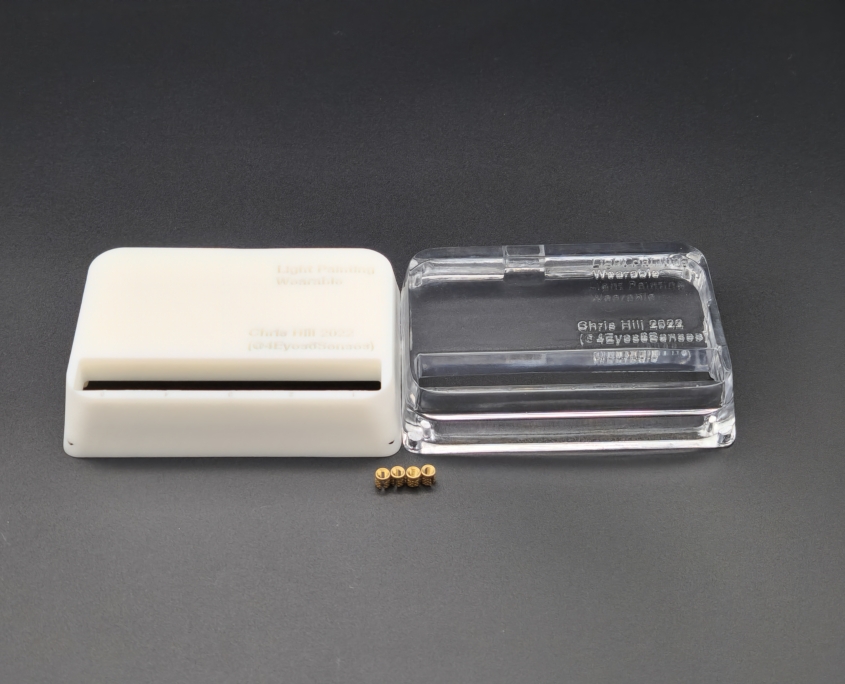

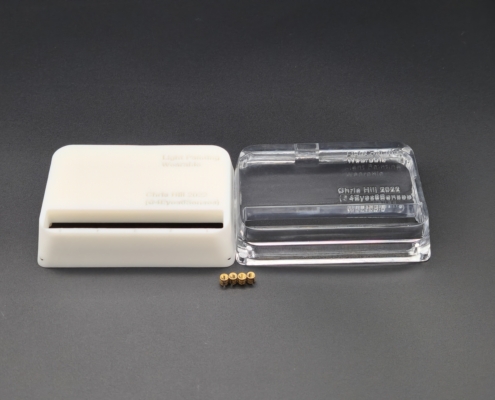

Housing:

Finished Device:

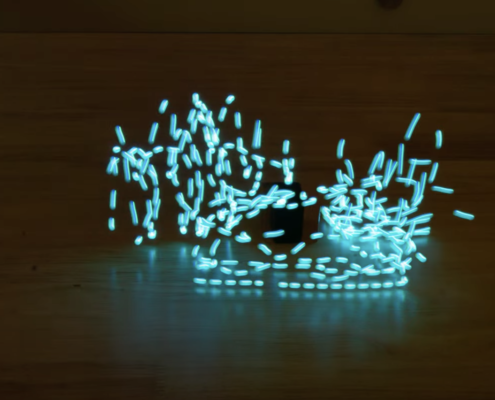

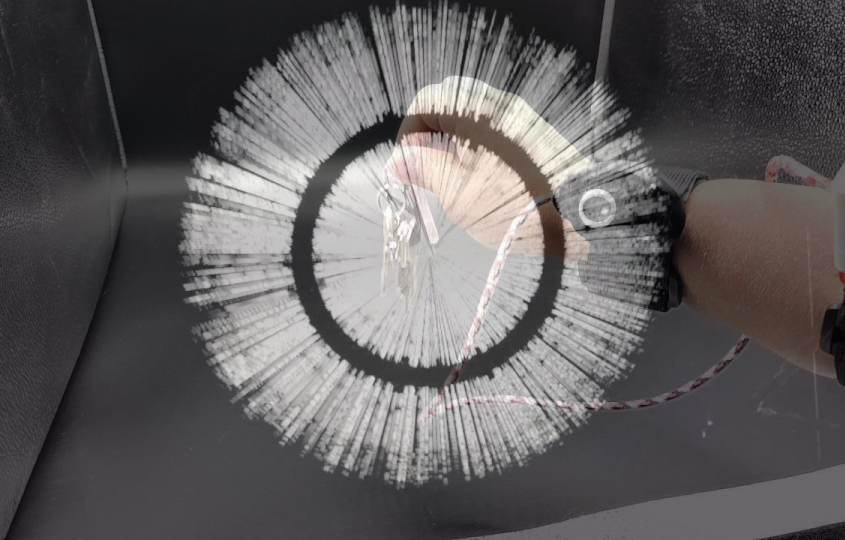

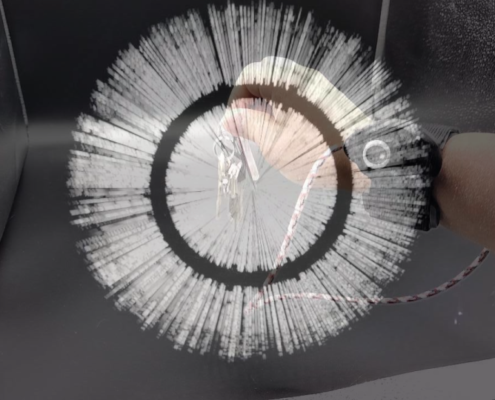

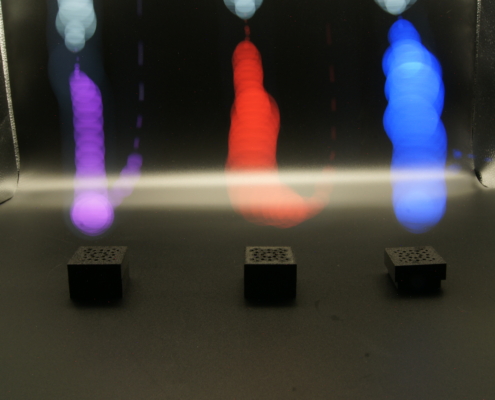

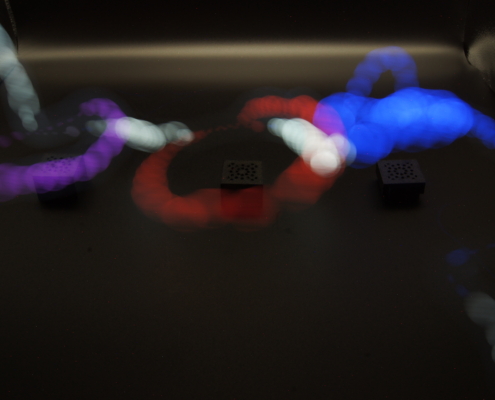

Selected Light Paintings:

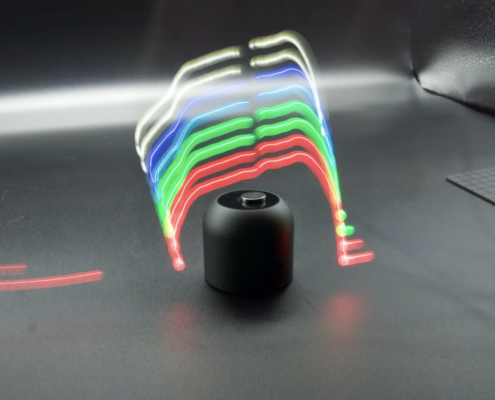

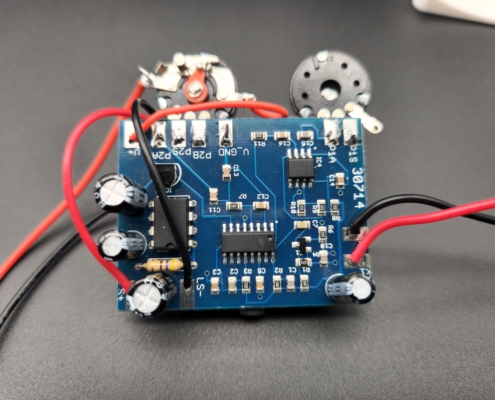

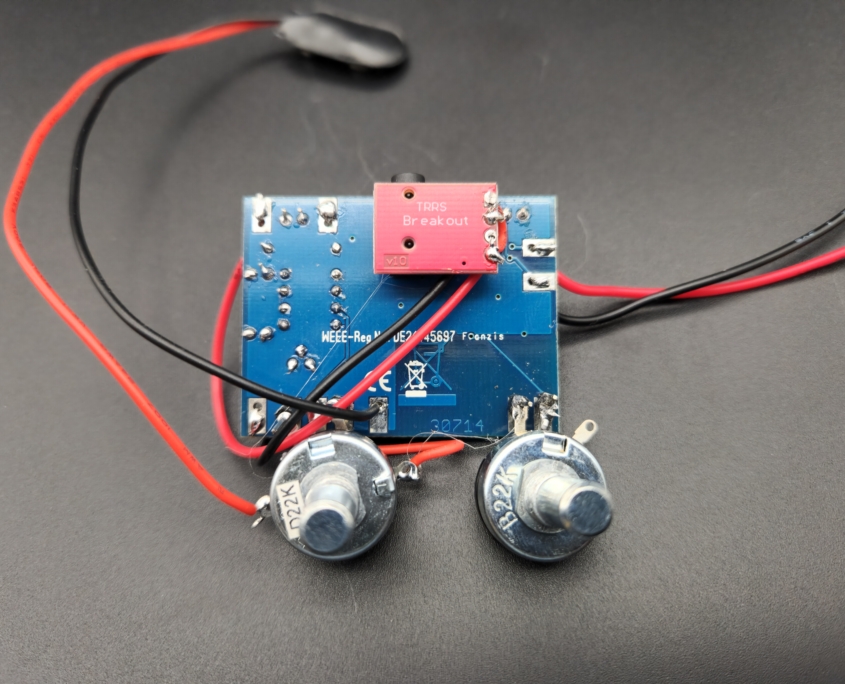

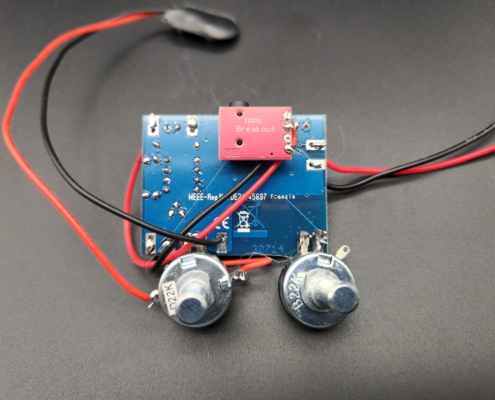

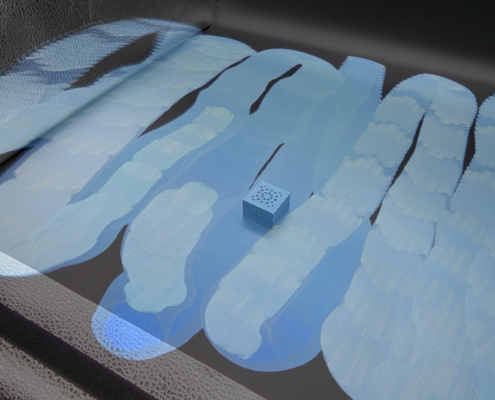

Exploration 2: Visualize Ultrasonic Frequencies With a Third Ear Wearable

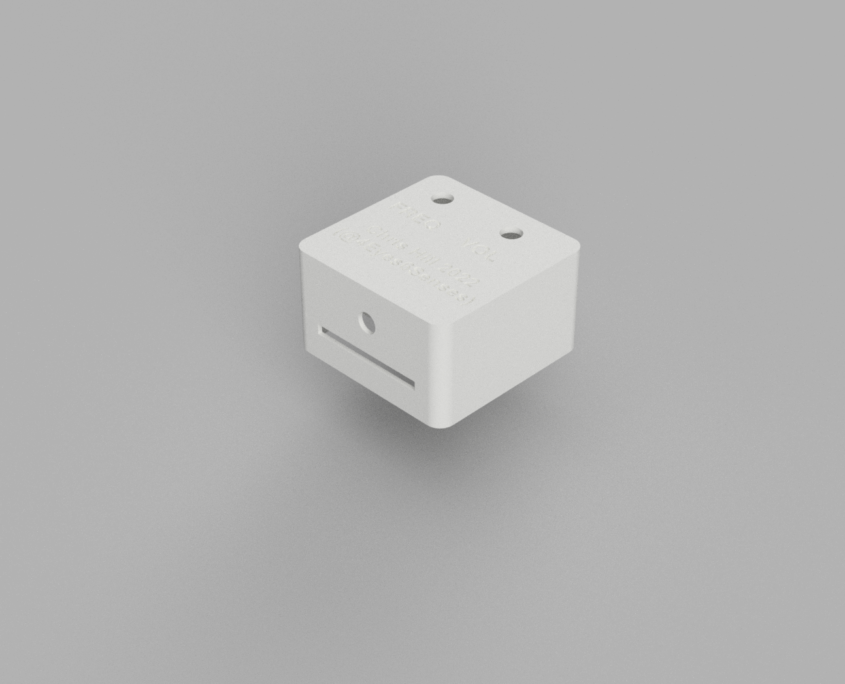

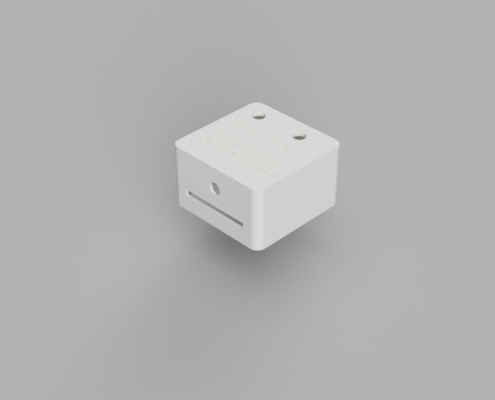

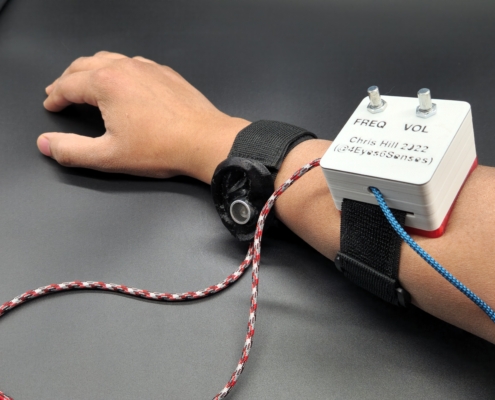

Introduction

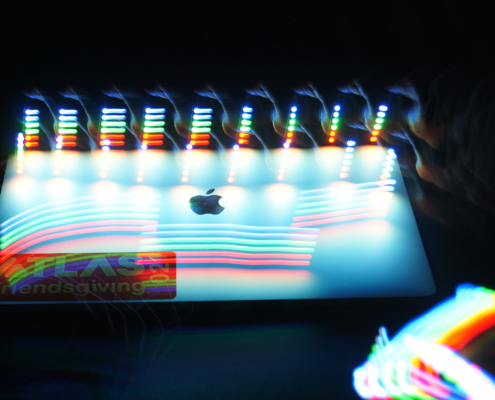

Continuing my interest in wearable augmentations that visualize phenomena, I designed a “third ear” that enables you to listen to and/or visualize ultrasonic frequencies. For perspective, the normal hearing range for humans is around 20Hz to 20kHz. We start with an upper limit of about 20kHz as babies, and by adulthood our hearing declines to around 17kHz. With this third ear, you can detect frequencies between 20kHz and 100kHz, enabling brand-new sensing of your environment. You can hear the frequencies in real time with a pair of wired headphones, or you can visualize the phenomena with a p5.js script. The device can also enable new perspective-taking, as you can hear frequencies similar to those heard by your dog (up to 40kHz), your cat (up to 64kHz), or a bat (up to 100kHz).
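To make the frequency ranges above concrete, the following Python sketch checks which listeners could hear a given tone and whether it falls inside the third ear’s range. The upper limits come from the figures quoted above; lower hearing limits are ignored for simplicity, and the names `who_can_hear`, `in_third_ear_range`, and `min_sample_rate_hz` are hypothetical helpers, not part of the device’s software. The last helper applies the Nyquist criterion, which says a signal must be sampled at roughly twice its highest frequency or more to be captured.

```python
# Upper hearing limits in Hz, taken from the figures quoted in the text.
UPPER_LIMITS_HZ = {
    "adult human": 17_000,
    "dog": 40_000,
    "cat": 64_000,
    "bat": 100_000,
}

# Detection range of the third ear as stated in the text.
THIRD_EAR_RANGE_HZ = (20_000, 100_000)


def who_can_hear(freq_hz: float) -> list:
    """List listeners whose upper hearing limit covers freq_hz.

    Simplification: lower hearing limits are not modeled.
    """
    return [name for name, limit in UPPER_LIMITS_HZ.items() if freq_hz <= limit]


def in_third_ear_range(freq_hz: float) -> bool:
    """True if the tone lies within the third ear's stated range."""
    low, high = THIRD_EAR_RANGE_HZ
    return low <= freq_hz <= high


def min_sample_rate_hz(max_freq_hz: float) -> float:
    """Nyquist bound: sample at about twice the highest frequency or more."""
    return 2 * max_freq_hz


if __name__ == "__main__":
    tone = 45_000
    print(who_can_hear(tone), in_third_ear_range(tone))
    print(min_sample_rate_hz(THIRD_EAR_RANGE_HZ[1]))
```

Under these assumptions, capturing the full range up to 100kHz calls for a sampling rate of about 200kHz or higher, well above the rates typical for ordinary audio hardware.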

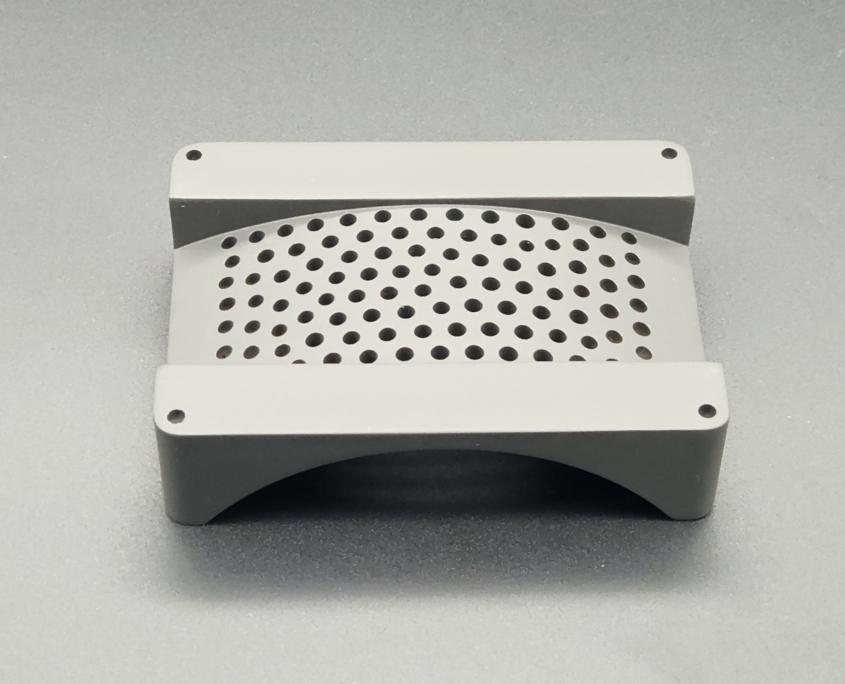

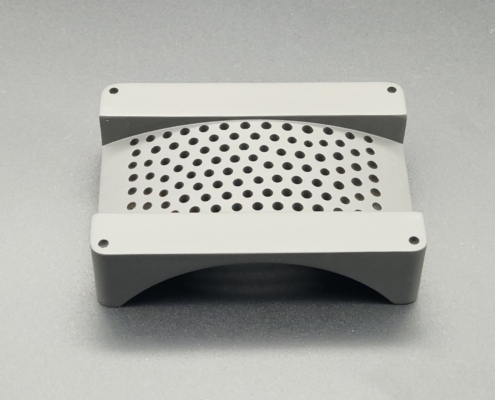

Housing:

Finished Device:

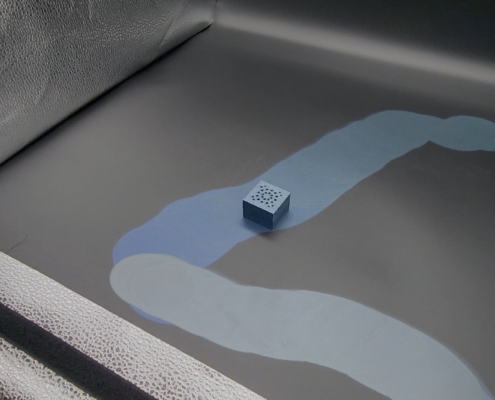

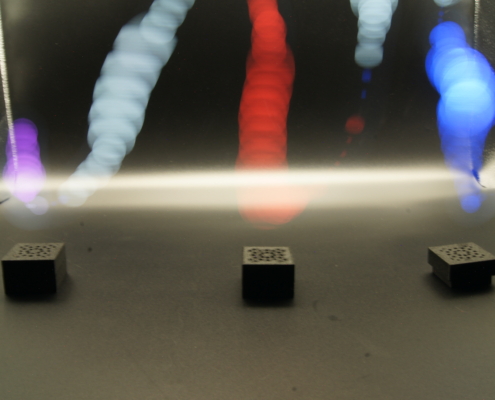

Selected Light Paintings:

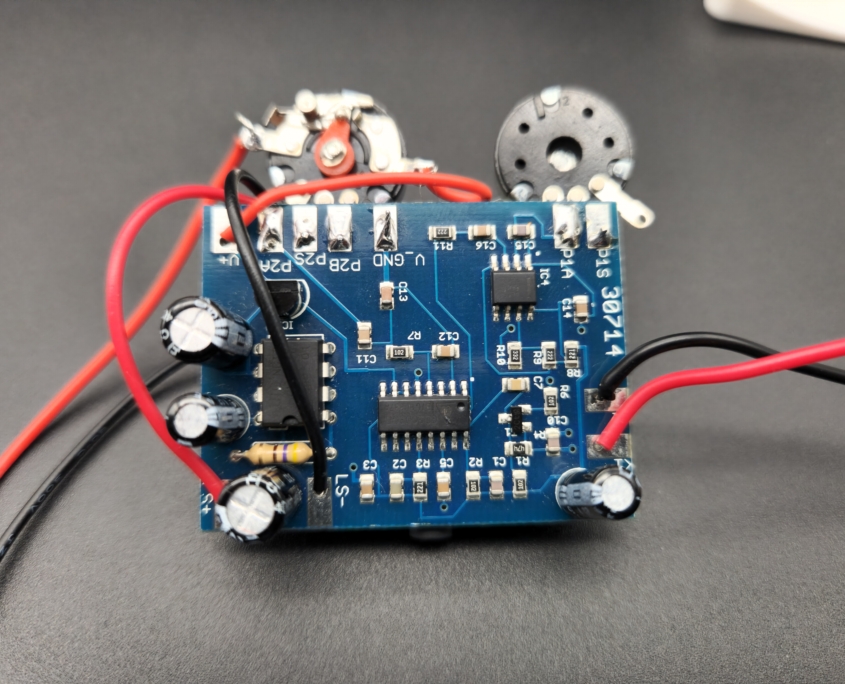

Exploration 3: Visualize AI Nose Classifications With a Light Painting Wearable

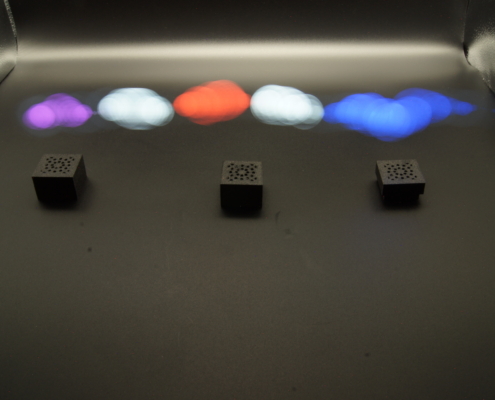

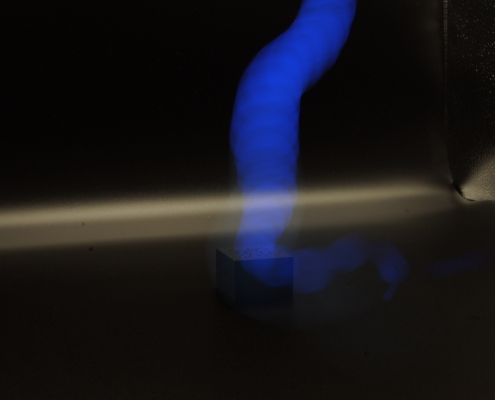

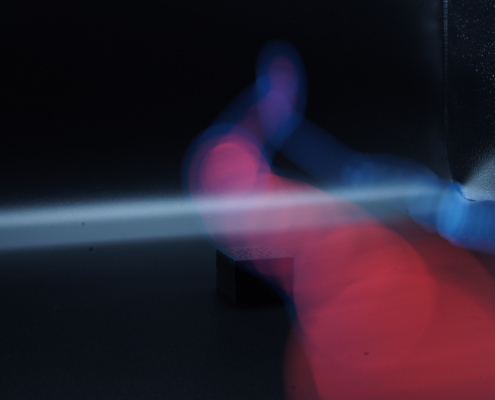

Introduction

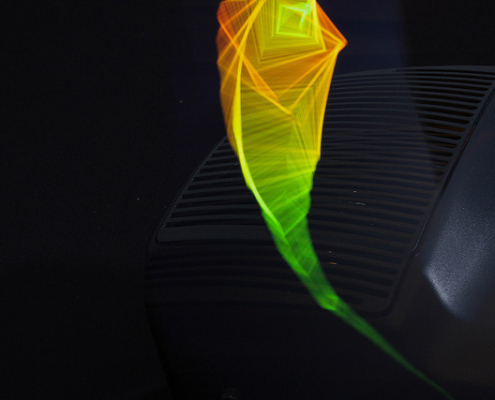

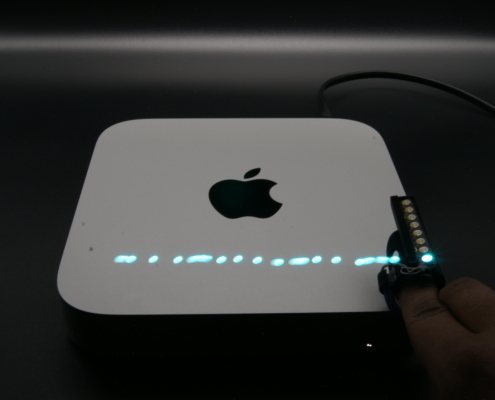

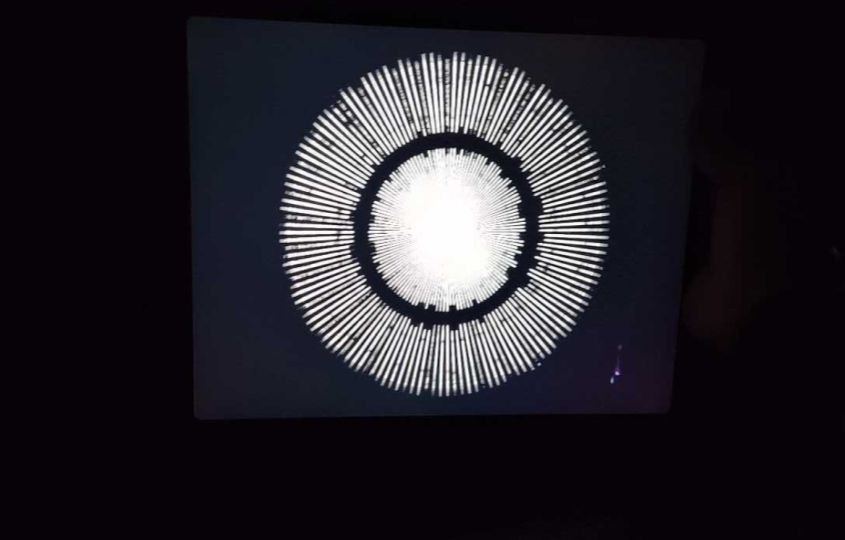

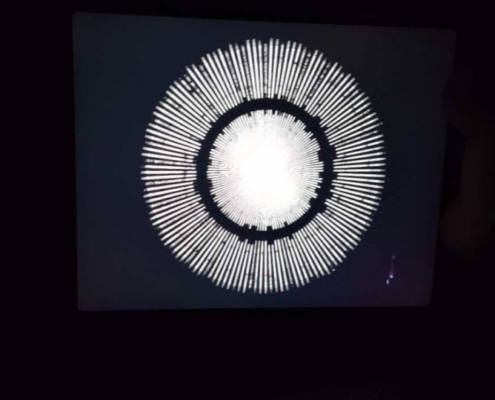

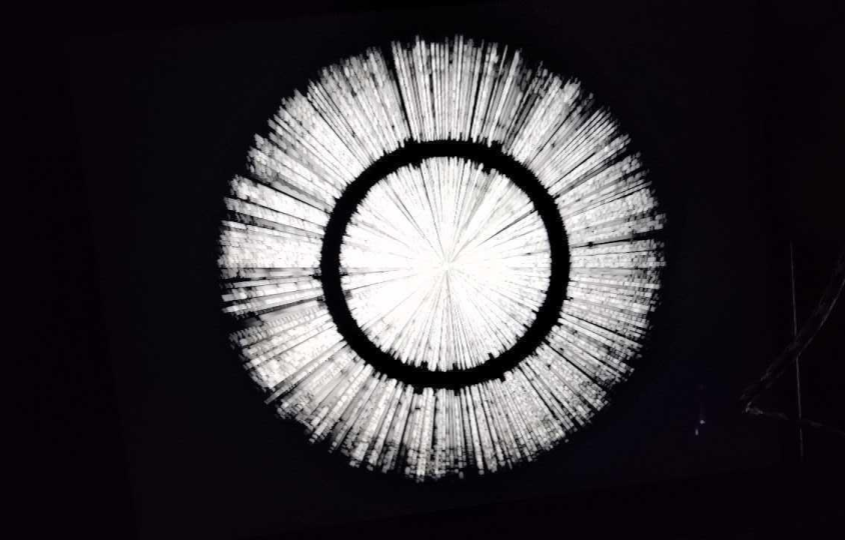

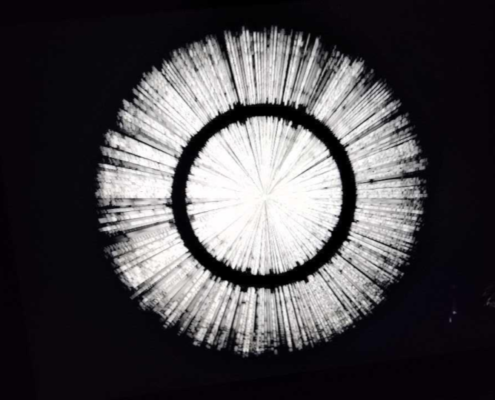

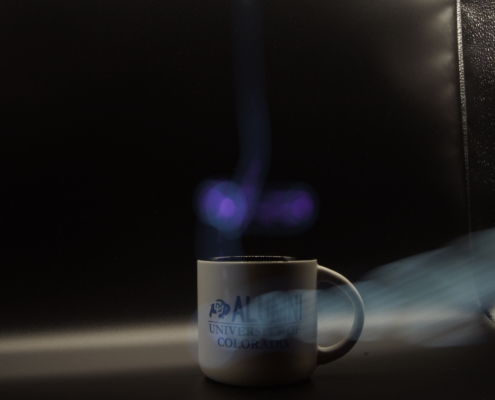

This project is a remix of Benjamin Cabé’s Artificial Nose Project and Shawn Hymel’s Sensor Fusion AI Nose Project.

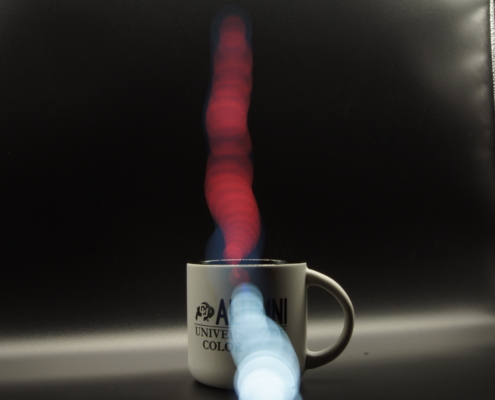

Inspired by these two projects, I wanted to create a system where two phenomena (smell and ML classification) could be made visible. My approach is light painting: the TFT screen on Seeed Studio’s Wio Terminal is photographed using long exposure to visualize the smells the AI nose detects and its confidence in each classification. As currently programmed, the color changes based on the scent recognized, and the circle on the TFT grows or shrinks based on the model’s confidence in its classification (more confident = larger circle), but this can be reprogrammed to any mapping you like. For the video above, blue is cologne, and white is ambient room smell.
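The scent-to-color and confidence-to-size mapping described above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the code running on the Wio Terminal: the label names, the radius limits, the fallback gray, the linear scaling, and the function `circle_for` are hypothetical. Only the blue and white color choices come from the description above.

```python
# Colors from the text: blue for cologne, white for ambient room smell.
SCENT_COLORS = {
    "cologne": (0, 0, 255),
    "ambient": (255, 255, 255),
}
FALLBACK_COLOR = (128, 128, 128)  # assumed: gray for any unmapped label

# Assumed radius limits in pixels, chosen only for illustration.
MIN_RADIUS = 10
MAX_RADIUS = 100


def circle_for(probabilities: dict) -> tuple:
    """Pick the most likely scent and return (color, radius) for its circle.

    probabilities maps each label to the classifier's score in 0.0..1.0.
    """
    label = max(probabilities, key=probabilities.get)
    confidence = max(0.0, min(1.0, probabilities[label]))
    # Linear mapping: more confident means a larger circle.
    radius = round(MIN_RADIUS + confidence * (MAX_RADIUS - MIN_RADIUS))
    color = SCENT_COLORS.get(label, FALLBACK_COLOR)
    return color, radius


if __name__ == "__main__":
    print(circle_for({"cologne": 0.5, "ambient": 0.3}))
```

Because the long exposure records every frame drawn while the device moves, both the color and the circle size leave a trace in the final photograph. Any other mapping, such as confidence to brightness, would only require changing `circle_for`.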

Housing:

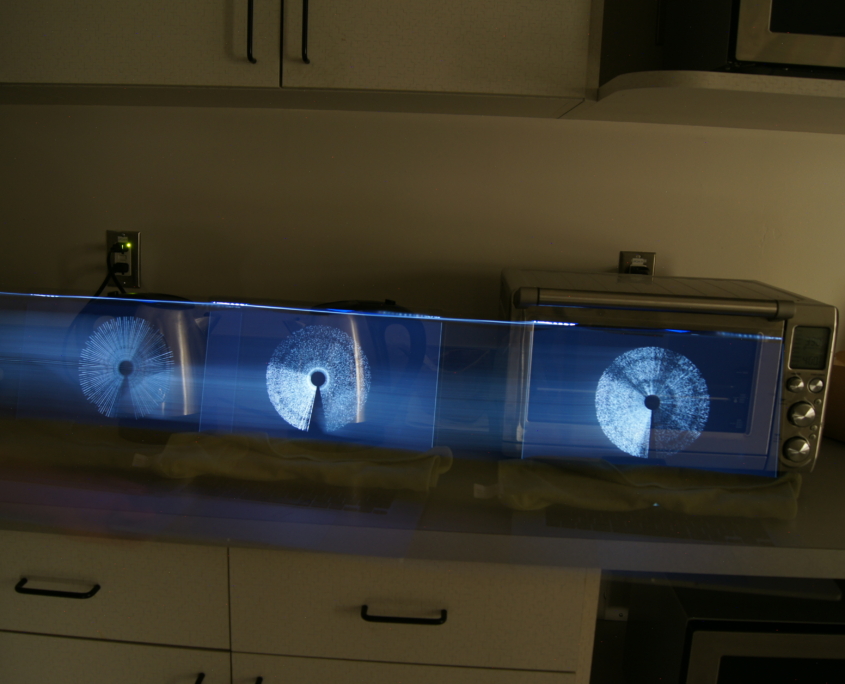

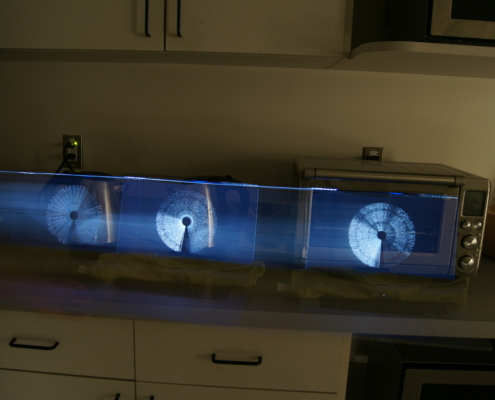

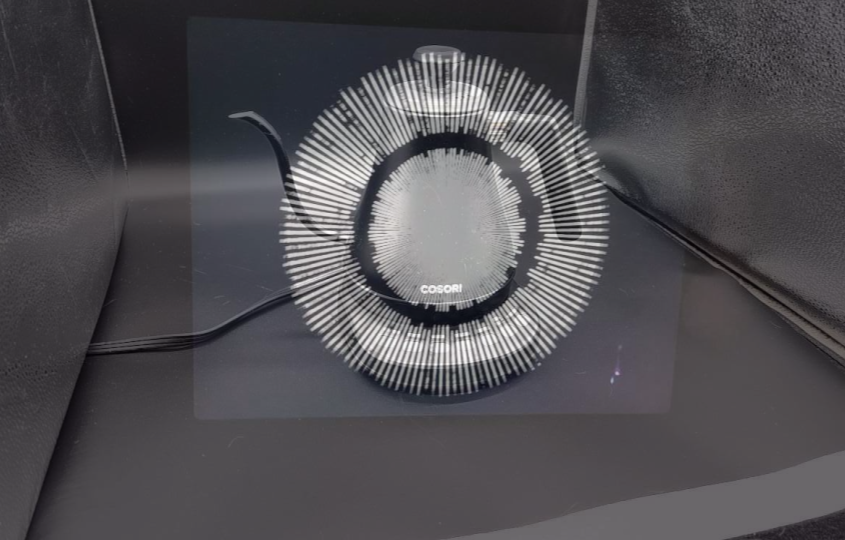

Finished Device:

Selected Light Paintings:

Selected Press:

2022 – Adafruit “Visualize and Hear Ultrasonic Frequencies With a Third Ear #WearableWednesday”

2022 – Hackster.io “Chris Hill’s ‘Third Ear’ Wearable Lets You Hear — or See — in Ultrasonic Frequencies”

2022 – ARDUINO “Use light painting to visualize magnetic fields”

2022 – Hackster.io “Making Magnetic Fields Visible with Light Painting”

2022 – Digi-Key “A Nose for Art [Maker Update] | Maker.io”

2022 – Hackster.io “Visualizing Smells in a Room with an AI-Powered Nose and Light Painting”